An example of RH236 GlusterFS storage configuration on Linux

Date: 2016-03-10 Editor: 简简单单 Source: 一聚教程网

| Node | Hostname | IP address |
|---|---|---|
| node1 | server2-a.example.com | 172.25.2.10 |
| node2 | server2-b.example.com | 172.25.2.11 |
| node3 | server2-c.example.com | 172.25.2.12 |
| node4 | server2-d.example.com | 172.25.2.13 |
| node5 | server2-e.example.com | 172.25.2.14 |

[root@server2-a ~]# gluster

gluster> peer probe 172.25.2.10

peer probe: success. Probe on localhost not needed

gluster> peer probe 172.25.2.11

peer probe: success.

gluster> peer probe 172.25.2.12

peer probe: success.

gluster> peer probe 172.25.2.13

peer probe: success.

gluster>
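The same probes can be issued without entering the interactive console, since `gluster peer probe <address>` also works as a one-shot command. A minimal sketch, assuming the three remote addresses from the host table; the function name `peer_probe_cmds` is hypothetical, and it only prints the commands so they can be reviewed before being run.

```shell
#!/bin/sh
# Print (not run) one "gluster peer probe" command per peer address.
# The local node (172.25.2.10) is left out because probing localhost
# is not needed, as the console output above shows.
peer_probe_cmds() {
    for ip in "$@"; do
        printf 'gluster peer probe %s\n' "$ip"
    done
}

peer_probe_cmds 172.25.2.11 172.25.2.12 172.25.2.13
```

Piping the output to `sh` on server2-a would run the probes; `gluster peer status` can then be used to check the result.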

[root@server2-a ~]# mkdir -p /bricks/test

[root@server2-a ~]# mkdir -p /bricks/data

[root@server2-a ~]# vgs

VG #PV #LV #SN Attr VSize VFree

vg_bricks 1 0 0 wz--n- 14.59g 14.59g

[root@server2-a ~]# lvcreate -L 13G -T vg_bricks/brickspool

Logical volume "lvol0" created

Logical volume "brickspool" created

[root@server2-a ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_a1

Logical volume "brick_a1" created

[root@server2-a ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_a2

Logical volume "brick_a2" created
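Thin volumes created with `-V` declare a virtual size that is only backed by the pool as data is written, so it can help to compare the sum of the virtual sizes with the pool size. A rough sketch of that arithmetic, assuming whole-gigabyte sizes and ignoring pool metadata overhead; the function name `thin_pool_check` is hypothetical.

```shell
#!/bin/sh
# Sum the virtual sizes (in G) of thin volumes and compare with the pool.
# Usage: thin_pool_check POOL_G VOL_G...
thin_pool_check() {
    pool=$1; shift
    total=0
    for v in "$@"; do
        total=$((total + v))
    done
    if [ "$total" -gt "$pool" ]; then
        echo "virtual=${total}G pool=${pool}G overcommitted"
    else
        echo "virtual=${total}G pool=${pool}G fits"
    fi
}

# The layout above: a 13G pool holding two 3G thin volumes.
thin_pool_check 13 3 3
```

For this layout the two bricks claim 6G of the 13G pool, so the pool is not overcommitted.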

[root@server2-a ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_a1

[root@server2-a ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_a2

[root@server2-a ~]# cat /etc/fstab |grep -v \#

UUID=0cad9910-91e8-4889-8764-fab83b8497b9 / ext4 defaults 1 1

UUID=661c5335-6d03-4a7b-a473-a842b833f995 /boot ext4 defaults 1 2

UUID=b37f001b-5ef3-4589-b813-c7c26b4ac2af swap swap defaults 0 0

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

/dev/vg_bricks/brick_a1 /bricks/test/ xfs defaults 0 0

/dev/vg_bricks/brick_a2 /bricks/data/ xfs defaults 0 0

[root@server2-a ~]# mount -a

[root@server2-a ~]# df -h

[root@server2-a ~]# mkdir -p /bricks/test/testvol_n1

[root@server2-a ~]# mkdir -p /bricks/data/datavol_n1

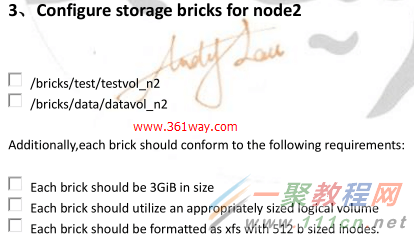

[root@server2-b ~]# mkdir -p /bricks/test

[root@server2-b ~]# mkdir -p /bricks/data

[root@server2-b ~]# lvcreate -L 13G -T vg_bricks/brickspool

[root@server2-b ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_b1

[root@server2-b ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_b2

[root@server2-b ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_b1

[root@server2-b ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_b2

Entries added to /etc/fstab on server2-b:

/dev/vg_bricks/brick_b1 /bricks/test/ xfs defaults 0 0

/dev/vg_bricks/brick_b2 /bricks/data/ xfs defaults 0 0

[root@server2-b ~]# mount -a

[root@server2-b ~]# df -h

[root@server2-b ~]# mkdir -p /bricks/test/testvol_n2

[root@server2-b ~]# mkdir -p /bricks/data/datavol_n2

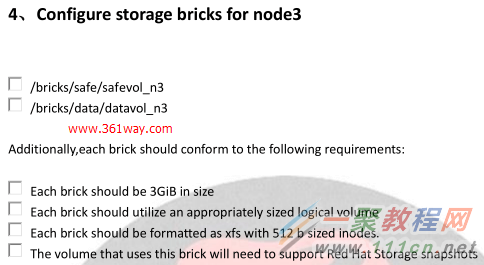

[root@server2-c ~]# mkdir -p /bricks/safe

[root@server2-c ~]# mkdir -p /bricks/data

[root@server2-c ~]# lvcreate -L 13G -T vg_bricks/brickspool

[root@server2-c ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_c1

[root@server2-c ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_c2

[root@server2-c ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_c1

[root@server2-c ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_c2

Entries added to /etc/fstab on server2-c:

/dev/vg_bricks/brick_c1 /bricks/safe/ xfs defaults 0 0

/dev/vg_bricks/brick_c2 /bricks/data/ xfs defaults 0 0

[root@server2-c ~]# mount -a

[root@server2-c ~]# df -h

[root@server2-c ~]# mkdir -p /bricks/safe/safevol_n3

[root@server2-c ~]# mkdir -p /bricks/data/datavol_n3

bricks-rep

[root@server2-d ~]# mkdir -p /bricks/safe

[root@server2-d ~]# mkdir -p /bricks/data

[root@server2-d ~]# lvcreate -L 13G -T vg_bricks/brickspool

[root@server2-d ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_d1

[root@server2-d ~]# lvcreate -V 3G -T vg_bricks/brickspool -n brick_d2

[root@server2-d ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_d1

[root@server2-d ~]# mkfs.xfs -i size=512 /dev/vg_bricks/brick_d2

Entries added to /etc/fstab on server2-d:

/dev/vg_bricks/brick_d1 /bricks/safe/ xfs defaults 0 0

/dev/vg_bricks/brick_d2 /bricks/data/ xfs defaults 0 0

[root@server2-d ~]# mount -a

[root@server2-d ~]# df -h

[root@server2-d ~]# mkdir -p /bricks/safe/safevol_n4

[root@server2-d ~]# mkdir -p /bricks/data/datavol_n4
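The brick preparation on the four nodes follows one pattern and differs only in the node letter and the first mount directory. A sketch that prints the command sequence for one node, assuming the same volume group, pool size and brick size as above. The function name `brick_prep_cmds` is hypothetical, and appending to `/etc/fstab` with `echo` is an assumption standing in for editing the file by hand. The final brick subdirectories (for example `safevol_n3`) are not covered.

```shell
#!/bin/sh
# Print the brick-preparation commands for one node.
# Usage: brick_prep_cmds LETTER MOUNT1 MOUNT2
#   LETTER  node suffix used in the LV names (a, b, c, d)
#   MOUNT1  first directory under /bricks (test or safe)
#   MOUNT2  second directory under /bricks (data)
brick_prep_cmds() {
    n=$1; m1=$2; m2=$3
    echo "mkdir -p /bricks/$m1 /bricks/$m2"
    echo "lvcreate -L 13G -T vg_bricks/brickspool"
    i=1
    for m in "$m1" "$m2"; do
        lv="brick_${n}${i}"
        echo "lvcreate -V 3G -T vg_bricks/brickspool -n $lv"
        echo "mkfs.xfs -i size=512 /dev/vg_bricks/$lv"
        # Assumption: fstab is appended to rather than edited manually.
        echo "echo '/dev/vg_bricks/$lv /bricks/$m xfs defaults 0 0' >> /etc/fstab"
        i=$((i + 1))
    done
    echo "mount -a"
}

brick_prep_cmds c safe data
```

Because the function only prints, the output can be compared with the transcript for each node before anything is executed.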

[root@server2-a ~]# gluster

gluster> volume create testvol 172.25.2.10:/bricks/test/testvol_n1 172.25.2.11:/bricks/test/testvol_n2

volume create: testvol: success: please start the volume to access data

gluster> volume start testvol

volume start: testvol: success

gluster> volume set testvol auth.allow 172.25.2.*

gluster> volume list

gluster> volume info testvol

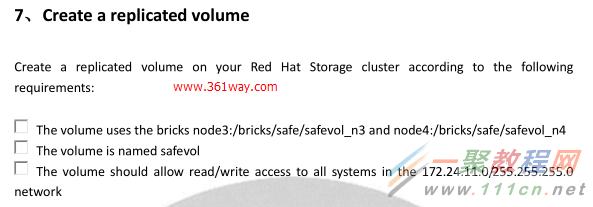

[root@server2-a ~]# gluster

gluster> volume create safevol replica 2 172.25.2.12:/bricks/safe/safevol_n3 172.25.2.13:/bricks/safe/safevol_n4

gluster> volume start safevol

gluster> volume set safevol auth.allow 172.25.2.*

gluster> volume list

gluster> volume info safevol

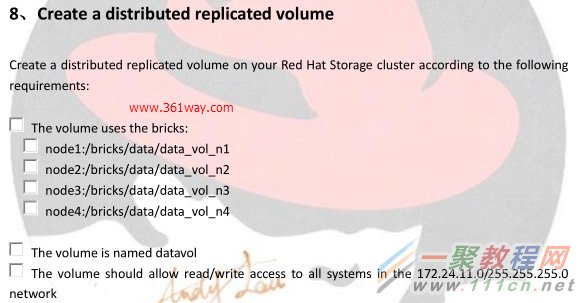

[root@server2-a ~]# gluster

gluster> volume create datavol replica 2 172.25.2.10:/bricks/data/datavol_n1 172.25.2.11:/bricks/data/datavol_n2 172.25.2.12:/bricks/data/datavol_n3 172.25.2.13:/bricks/data/datavol_n4

gluster> volume start datavol

gluster> volume set datavol auth.allow 172.25.2.*

gluster> volume list

gluster> volume info datavol

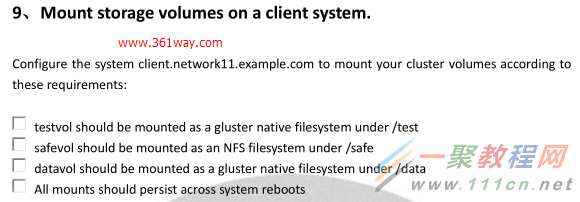
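With `replica 2` and four bricks, the bricks are grouped into replica sets in the order they are listed on the command line, so brick order decides which nodes mirror each other. A small sketch that shows the grouping, using the brick directories created on each node earlier; the function name `replica_sets` is hypothetical.

```shell
#!/bin/sh
# Show which bricks form each replica set for "replica N":
# consecutive bricks on the command line are grouped together.
# Usage: replica_sets N BRICK...
replica_sets() {
    n=$1; shift
    set_no=1
    count=0
    line=""
    for b in "$@"; do
        line="${line:+$line }$b"
        count=$((count + 1))
        if [ "$count" -eq "$n" ]; then
            echo "set $set_no: $line"
            set_no=$((set_no + 1))
            count=0
            line=""
        fi
    done
}

replica_sets 2 \
    172.25.2.10:/bricks/data/datavol_n1 \
    172.25.2.11:/bricks/data/datavol_n2 \
    172.25.2.12:/bricks/data/datavol_n3 \
    172.25.2.13:/bricks/data/datavol_n4
```

Here node1 and node2 form the first replica pair and node3 and node4 the second, with files distributed across the two pairs.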

mount-glusterfs

[root@server2-e ~]# cat /etc/fstab |grep -v \#

UUID=0cad9910-91e8-4889-8764-fab83b8497b9 / ext4 defaults 1 1

UUID=661c5335-6d03-4a7b-a473-a842b833f995 /boot ext4 defaults 1 2

UUID=b37f001b-5ef3-4589-b813-c7c26b4ac2af swap swap defaults 0 0

tmpfs /dev/shm tmpfs defaults 0 0

devpts /dev/pts devpts gid=5,mode=620 0 0

sysfs /sys sysfs defaults 0 0

proc /proc proc defaults 0 0

172.25.2.10:/testvol /test glusterfs _netdev,acl 0 0

172.25.2.10:/safevol /safe nfs _netdev 0 0

172.25.2.10:/datavol /data glusterfs _netdev 0 0

[root@server2-e ~]# mkdir /test /safe /data

[root@server2-e ~]# mount -a

[root@server2-e ~]# df -h
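The three client entries share one shape: server and volume, mount point, filesystem type, then options. A sketch that generates such lines, assuming `_netdev` as the default option when none is given; the function name `gluster_fstab_line` is hypothetical.

```shell
#!/bin/sh
# Print one /etc/fstab entry for a Gluster volume.
# Usage: gluster_fstab_line SERVER VOLUME MOUNTPOINT FSTYPE [OPTIONS]
# OPTIONS defaults to _netdev, which delays the mount until the network is up.
gluster_fstab_line() {
    printf '%s:/%s %s %s %s 0 0\n' "$1" "$2" "$3" "$4" "${5:-_netdev}"
}

gluster_fstab_line 172.25.2.10 testvol /test glusterfs _netdev,acl
gluster_fstab_line 172.25.2.10 safevol /safe nfs
gluster_fstab_line 172.25.2.10 datavol /data glusterfs
```

The output reproduces the three entries shown in the client's `/etc/fstab` above.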

[root@server2-e ~]# mkdir -p /test/confidential

[root@server2-e ~]# groupadd admins

[root@server2-e ~]# chgrp admins /test/confidential/

[root@server2-e ~]# useradd suresh

[root@server2-e ~]# cd /test/

[root@server2-e test]# setfacl -m u:suresh:rwx /test/confidential/

[root@server2-e test]# setfacl -m d:u:suresh:rwx /test/confidential/

[root@server2-e test]# useradd anita

[root@server2-e test]# setfacl -m u:anita:rx /test/confidential/

[root@server2-e test]# setfacl -m d:u:anita:rx /test/confidential/

[root@server2-e test]# chmod o-rx /test/confidential/
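Each user above gets two ACL entries: an access entry that applies to the directory itself, and a default entry (the `d:` prefix) that newly created files and subdirectories inherit. A sketch that prints both commands for one user; the function name `acl_cmds` is hypothetical.

```shell
#!/bin/sh
# Print the access and default ACL commands for one user on one directory.
# Usage: acl_cmds USER PERMS DIR
acl_cmds() {
    user=$1; perms=$2; dir=$3
    echo "setfacl -m u:$user:$perms $dir"    # applies to DIR itself
    echo "setfacl -m d:u:$user:$perms $dir"  # inherited by new entries in DIR
}

acl_cmds suresh rwx /test/confidential/
acl_cmds anita rx /test/confidential/
```

Running `getfacl /test/confidential/` afterwards is one way to confirm that both kinds of entries are present.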

glusterfs-quota

[root@server2-e ~]# mkdir -p /safe/mailspool

[root@server2-a ~]# gluster

gluster> volume quota safevol enable

gluster> volume quota safevol limit-usage /mailspool 192MB

gluster> volume quota safevol list

[root@server2-e ~]# chmod o+w /safe/mailspool
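Note that the quota path is relative to the volume root, not to the client mount point: `/safe/mailspool` on the client is `/mailspool` inside safevol. A sketch of that mapping, assuming the volume is mounted at `/safe` as above; the function name `quota_path` is hypothetical.

```shell
#!/bin/sh
# Convert a path under a client mount point to the volume-relative path
# expected by "gluster volume quota VOLUME limit-usage".
# Usage: quota_path MOUNTPOINT CLIENT_PATH
quota_path() {
    mount=${1%/}
    path=$2
    case "$path" in
        "$mount")   echo "/" ;;
        "$mount"/*) rel=${path#"$mount"}; echo "$rel" ;;
        *)          return 1 ;;   # path is not under the mount point
    esac
}

# Print (not run) the limit command for the mailspool directory.
echo "gluster volume quota safevol limit-usage $(quota_path /safe /safe/mailspool) 192MB"
```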

Geo-replication

[root@server2-e ~]# lvcreate -L 13G -T vg_bricks/brickspool

[root@server2-e ~]# lvcreate -V 8G -T vg_bricks/brickspool -n slavebrick1

[root@server2-e ~]# mkfs.xfs -i size=512 /dev/vg_bricks/slavebrick1

[root@server2-e ~]# mkdir -p /bricks/slavebrick1

[root@server2-e ~]# vim /etc/fstab

/dev/vg_bricks/slavebrick1 /bricks/slavebrick1 xfs defaults 0 0

[root@server2-e ~]# mount -a

[root@server2-e ~]# mkdir -p /bricks/slavebrick1/brick

[root@server2-e ~]# gluster volume create testrep 172.25.2.14:/bricks/slavebrick1/brick/

[root@server2-e ~]# gluster volume start testrep

[root@server2-e ~]# groupadd repgrp

[root@server2-e ~]# useradd georep -G repgrp

[root@server2-e ~]# passwd georep

[root@server2-e ~]# mkdir -p /var/mountbroker-root

[root@server2-e ~]# chmod 0711 /var/mountbroker-root/

[root@server2-e ~]# cat /etc/glusterfs/glusterd.vol

volume management

type mgmt/glusterd

option working-directory /var/lib/glusterd

option transport-type socket,rdma

option transport.socket.keepalive-time 10

option transport.socket.keepalive-interval 2

option transport.socket.read-fail-log off

option ping-timeout 0

# option base-port 49152

option rpc-auth-allow-insecure on

option mountbroker-root /var/mountbroker-root/

option mountbroker-geo-replication.georep testrep

option geo-replication-log-group repgrp

end-volume

[root@server2-e ~]# /etc/init.d/glusterd restart

[root@server2-a ~]# ssh-keygen

[root@server2-a ~]# ssh-copy-id georep@172.25.2.14

[root@server2-a ~]# ssh georep@172.25.2.14

[root@server2-a ~]# gluster system:: execute gsec_create

[root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep create push-pem

[root@server2-e ~]# sh /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh georep testvol testrep

[root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep start

[root@server2-a ~]# gluster volume geo-replication testvol georep@172.25.2.14::testrep status
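The geo-replication commands alternate between the master node and the slave node, and their order matters. A sketch that prints the sequence used above with the host each step runs on, assuming the SSH key for the replication user is already in place; the function name `georep_cmds` is hypothetical.

```shell
#!/bin/sh
# Print the geo-replication setup steps in order, prefixed by where they run.
# Usage: georep_cmds MASTER_VOL USER SLAVE_HOST SLAVE_VOL
georep_cmds() {
    master=$1; user=$2; slave_host=$3; slave_vol=$4
    target="$user@$slave_host::$slave_vol"
    echo "master: gluster system:: execute gsec_create"
    echo "master: gluster volume geo-replication $master $target create push-pem"
    echo "slave:  sh /usr/libexec/glusterfs/set_geo_rep_pem_keys.sh $user $master $slave_vol"
    echo "master: gluster volume geo-replication $master $target start"
    echo "master: gluster volume geo-replication $master $target status"
}

georep_cmds testvol georep 172.25.2.14 testrep
```

Here the master is server2-a and the slave is server2-e, matching the prompts in the transcript.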

glusterfs-snapshot

[root@server2-a ~]# gluster snapshot create safe-snap safevol
